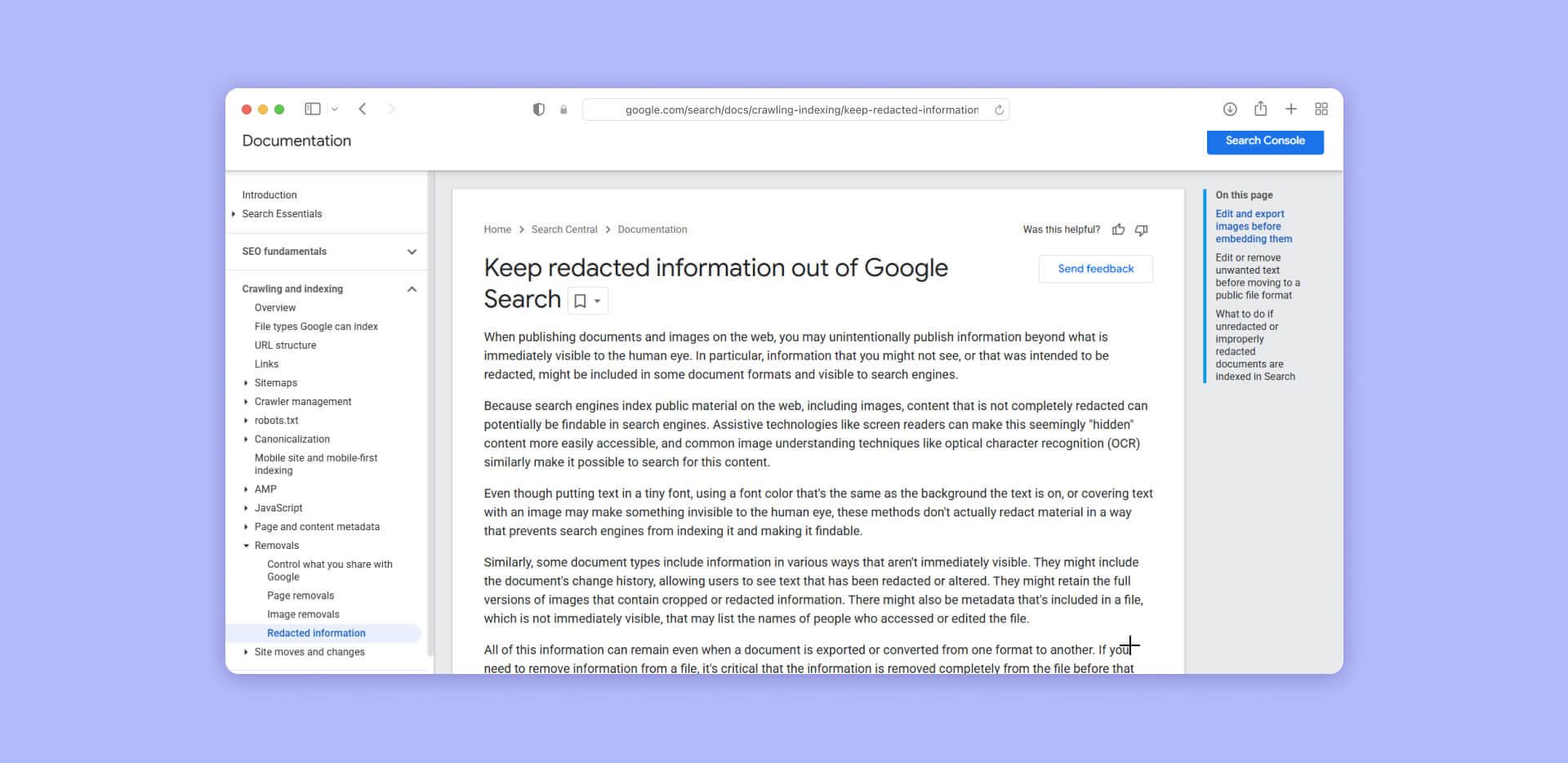

Google published guidance warning publishers that "hidden" information in documents isn't actually hidden from search engines. Their crawlers extract data you can't see - metadata, image layers, revision histories—and make it searchable. This guide breaks down Google's technical recommendations for actually removing sensitive data before it appears in search results.

Web search crawlers are finding your sensitive data.

Not because they're designed to breach security. Because most people don't realize what "publicly accessible" actually means to a search engine.

Google’s official documentation addresses a widespread problem: sensitive information appearing in search results because publishers don't understand how search indexing actually works. The guidance is direct and technical, aimed at preventing the kind of exposure incidents that turn into front-page news stories.

This is a real and widespread problem that barely anyone understands. A law firm's confidential client list shows up in search results. A hospital's patient identifiers become discoverable through simple queries. A government agency's internal budget details leak through documents that looked clean on screen but contained hidden data layers.

The guidance explains exactly what search crawlers see that you don't, and how to actually remove it.

What Google actually crawls and indexes

Google's crawlers index every publicly accessible file format on the web. PDFs, Word documents, Excel spreadsheets, PowerPoint presentations, images - if it's reachable through a public URL, it gets analyzed and potentially added to search results.

The crawlers extract more than what you see on screen. They analyze document metadata, file properties, embedded images, text layers in PDFs, revision histories, comments, hidden sheets in spreadsheets, and more. Screen readers and OCR technologies can access this same hidden content, which means anything searchable by assistive technologies becomes searchable by Google.

Google explicitly warns about several scenarios where information appears private but remains fully indexable:

- Text in tiny fonts or fonts matching the background color stays in the document's text layer and gets indexed normally.

- Images covered by shapes or rectangles retain their original content in embedded image data.

- Documents with change history enabled preserve deleted or altered text in revision metadata.

- Cropped images embedded in documents often include the full uncropped original in the file structure.

- Metadata fields contain author names, edit timestamps, and organizational information.

The technical reality: if data exists anywhere in a file's structure, Google's crawlers can find it. Visual appearance matters to human readers. File structure matters to search engines.

Google's guidance on image handling

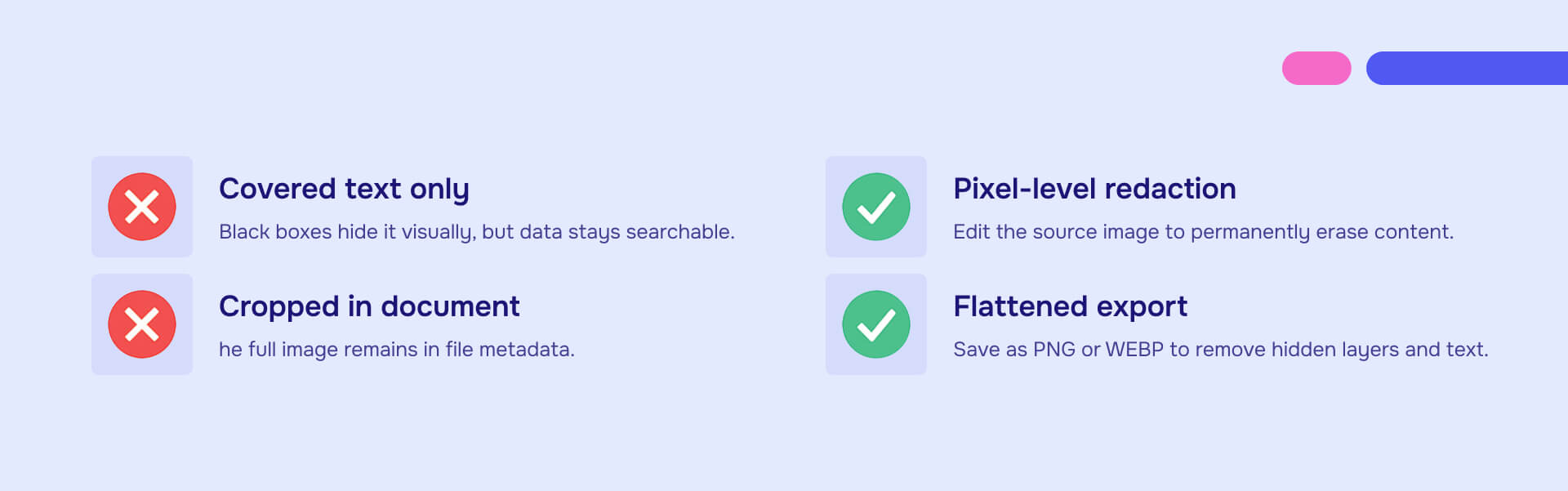

Google's first recommendation focuses on images because image redaction failures are both common and severe. The core principle: edit images before embedding them in documents, never after.

Their specific requirements:

- Crop images at the source file level to permanently remove unwanted information. Many document editors maintain the full uncropped original even when the document only displays a cropped portion. Review your tool's documentation to verify whether cropping actually removes data or just hides it from view.

- Remove or obscure text completely using pixel-level editing because OCR systems extract text from images regardless of visual coverings. This means actually editing the image pixels, not placing a shape over the text.

- Strip metadata from images before embedding. Image files carry EXIF data including GPS coordinates, camera information, timestamps, and editing software details.

After making all edits, export images as non-vector, flattened formats. Google specifically recommends PNG or WEBP. Vector formats like SVG can contain hidden layers and text objects that remain accessible even when not displayed.

Why this matters:

Google Search indexes images separately from the documents containing them. An image properly redacted within a document viewer may still expose sensitive information when Google indexes the underlying image file and displays it in image search results. The redaction must exist in the image file itself, not just in how the document displays it.

Google's guidance on document formats and text

Before generating a public document, Google recommends removing unwanted text entirely and moving to formats that don't preserve change history.

Their specific requirements:

Use proper document redacting tools rather than placing black rectangles over text using design tools, or PDF editors. Google explicitly calls out this common mistake—the covered text remains in the file's text layer and gets indexed normally.

Double-check document metadata in the final public file. Author names, revision counts, comments, and organizational information all get indexed along with visible content.

Follow format-specific redaction best practices. Google links to general guidance but emphasizes that PDF, image, and document formats each have unique requirements for proper data removal.

Consider information in URLs and file names themselves. Even URLs blocked by robots.txt may get indexed (without their content). Use hashed values in URL parameters instead of email addresses or names.

Consider authentication to limit access to sensitive content. Serve login pages with a noindex robots meta tag to prevent the authentication page from appearing in search results.

The robots.txt caveat:

Google makes an important technical point: robots.txt blocks search crawlers from accessing page content, but URLs may still appear in search results. A file at example.com/reports/john-smith-ssn-123-45-6789.pdf might have its content blocked, but the URL itself becomes searchable, exposing the name and partial SSN in the file path.

Metadata removal requirements

Metadata presents one of the most overlooked exposure risks because it remains invisible during normal document viewing. Google's crawlers analyze file properties as thoroughly as visible content.

Common types of metadata that may be exposed include:

- Author names and organizational affiliations

- Creation and modification timestamps

- Software versions used to create the file

- Edit histories showing who accessed or changed the document

- Revision counts indicating how many times sensitive sections were modified

- Comments and annotations marked as resolved but still embedded

- Custom document properties with internal classification codes

Microsoft Office applications include built-in metadata removal tools under "Inspect Document" features. Adobe Acrobat provides "Remove Hidden Information" tools that scan for and eliminate various metadata types. Both require you to remember to use these features each time—metadata doesn't automatically clear when you export or save files. Platforms like Redactable automatically strip all metadata every time you redact a document, eliminating the risk of forgetting about this.

The most reliable approach: export to flattened final formats after completing all edits and redactions. PDF/A formats designed for archiving eliminate many complex document structures that can harbor metadata. Rasterized image formats contain no text layers or revision histories.

Always verify metadata removal by inspecting the final file's properties before publication. Metadata persists through format conversions if not explicitly removed during the export process.

URL and file naming practices

File names and URL structures become part of Google's index, creating exposure risks that persist even when document content is properly protected.

Google's specific guidance:

Never include email addresses, names, identifiers, or other sensitive information in URLs or file names. Even when robots.txt blocks the document content from being crawled, the URL itself may be indexed and appear in search results.

Use hashes or generic identifiers instead of meaningful names in URL parameters.

Examples of problematic versus safe naming:

❌ documents/patient-john-doe-medical-record.pdf

❌ reports/settlement-confidential-2024.pdf

❌ files?email=attorney@lawfirm.com&case=12345

✅ documents/record-8a7f3b2c.pdf

✅ reports/case-pub-047.pdf

✅ files?id=9c2e5f1a

This applies to directory structures too. URLs like example.com/clients/smith-corp/confidential/ expose the client relationship and classification level even if the directory listing is blocked.

Google Search Console for emergency response

Google strongly recommends verifying your website in Google Search Console before problems occur. Verification enables access to removal tools that process requests within one day for verified site owners versus the weeks required for standard recrawling updates.

The Removals tool allows verified owners to urgently remove URLs from search results. For multiple documents sharing a URL pattern, URL prefix removal can clear entire directories at once.

This matters because discovery of exposed sensitive information requires immediate action, not waiting for natural search index updates that may take weeks or months.

Google's incident response procedures

When improperly prepared documents are already indexed in Search, Google provides specific recovery steps:

1. Remove the live document immediately

Take down the file from its current URL. This prevents new exposure while you work through the removal process.

2. Use the Removals tool for verified sites

Submit removal requests through Google Search Console. Verified site removals typically process within one day. Use URL prefix removal for multiple affected documents.

3. Host properly prepared documents at new URLs

Upload corrected versions to different URLs than the originals. New URLs enter the index with only current content, avoiding confusion with cached versions of old URLs. Recrawling and updating existing URLs takes significantly longer than indexing new ones.

4. Contact third-party sites hosting copies

Check whether other websites, forums, or document platforms host copies of the exposed files. Request removal from those site administrators.

For URLs you don't control, use Google's Outdated Content tool to request that Google recrawl and update cached versions.

5. Monitor removal request status

URL removal requests expire after approximately six months or after Google confirms the URL no longer contains the previously indexed content. Monitor Search Console to ensure removals remain active during the transition period.

The visual redaction problem

Google's guidance touches on a fundamental technical issue: methods that make information invisible to human viewers often leave data fully accessible to search systems.

Common approaches that fail:

- Placing black rectangles or shapes over text, or images

- Setting font colors to match backgrounds, or setting to 0% opacity

- Covering content with other images or objects

- Forgetting about sensitive data beneath layers in the PDF

- Cropping images using document editor tools rather than image editors

These methods change how documents display without changing what data they contain. The underlying text stays in the file structure. Search crawlers, screen readers, and OCR systems extract that text regardless of visual presentation.

True data removal requires using tools that actually delete information from file structures, not just hide it from view. Adobe Acrobat Pro's redaction feature, Microsoft Purview Information Protection, and other professional tools permanently remove data rather than masking it.

For organizations handling sensitive documents at scale - legal discovery, FOIA requests, medical records, financial filings—manual redaction creates bottlenecks. Automated platforms like Redactable combine AI-powered detection of 50+ sensitive data categories with permanent removal that satisfies Google's indexing requirements while reducing redaction time by 98% compared to manual processes.

Pre-publication checklist

Before making any document publicly accessible:

☐ Edited images at source file level before embedding

☐ Exported images to flattened PNG or WEBP formats

☐ Removed all unwanted text using proper redaction tools

☐ Stripped document metadata completely

☐ Verified file names and URLs contain no sensitive information

☐ Tested final document by attempting to select "hidden" text

☐ Inspected file properties to confirm metadata removal

☐ Verified site in Google Search Console for emergency access

☐ Reviewed that file access level (private/public) matches intent

Implementing Google's recommendations

Google's guidance boils down to a technical reality: search crawlers index file structures, not just visual presentation. Data that exists anywhere in a file can be extracted and indexed, regardless of whether human viewers can see it.

The recommendations require understanding how document formats work at a structural level. PDFs have text layers separate from visual display. Images have embedded metadata. Documents preserve edit histories. URLs become indexed independently of the content they point to.

Prevention proves far more effective than remediation. Once sensitive data enters search results, complete removal becomes difficult and time-consuming. Properly preparing documents before publication—actually removing data rather than hiding it, cleaning metadata, using appropriate file formats, and sanitizing URLs—prevents exposure incidents rather than trying to fix them after they occur.

For detailed technical specifications and updates, refer to Google's official documentation on keeping information out of search results.