In October 2024, a single "Ctrl+C" command accomplished what years of federal investigations couldn't: it unmasked more than 30 pages of TikTok's most guarded internal secrets.

Kentucky's Attorney General filed a 119-page complaint against TikTok, joining 12 other states and the District of Columbia in alleging the platform deliberately designed addictive features targeting children. The legal team attempted to hide the most damaging evidence - internal documents, executive communications, research data - behind black redaction boxes, as required by confidentiality agreements with TikTok.

The redactions were fake. Kentucky Public Radio discovered they could copy and paste the supposedly hidden text, revealing internal TikTok communications that executives never intended for public view.

What emerged wasn't just a TikTok privacy concern. The leaked documents exposed a company that allegedly knew exactly how to addict teenagers, deliberately manipulated beauty standards through algorithmic amplification, designed safety features as PR tools rather than protective measures, and maintained leakage rates as high as 100% for some categories of harmful content involving minors.

The redaction failure transformed a routine state enforcement action into a corporate transparency crisis - and demonstrated that even a government legal team prosecuting privacy violations can mistake visual masking for permanent data removal.

The smoking gun documents TikTok tried to hide

The improperly redacted Kentucky filing revealed internal TikTok research and executive communications across multiple damning areas. These weren't allegations from regulators - these were TikTok's own words about its own practices.

The "260 videos to addiction" formula

TikTok's internal research determined the precise threshold for forming user habits: 260 videos. TikTok videos run 8-15 seconds and play in rapid-fire auto-advance succession, so at the 8-second end of that range the threshold can be reached in roughly 35 minutes.
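The arithmetic behind that estimate is easy to check. The short sketch below assumes every video plays in full with no gap between clips, which is a simplification for illustration and not something the filing states.

```python
# Time needed to watch 260 videos back to back, for the clip lengths quoted above.
# Assumption: each video plays in full with no pause between clips.
HABIT_THRESHOLD_VIDEOS = 260


def minutes_to_threshold(seconds_per_video: float) -> float:
    """Return the minutes needed to watch HABIT_THRESHOLD_VIDEOS clips."""
    return HABIT_THRESHOLD_VIDEOS * seconds_per_video / 60


if __name__ == "__main__":
    for seconds in (8, 15):
        print(f"{seconds}-second videos: {minutes_to_threshold(seconds):.1f} minutes")
```

Under these assumptions, 8-second clips reach the threshold in just under 35 minutes, while 15-second clips take about 65 minutes.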

"After 260 videos, a user is likely to become addicted to the platform," according to internal documents exposed in the filing. The company didn't just study addiction patterns - it quantified exactly how quickly users could be hooked and optimized its features accordingly.

Additional research showed TikTok understood the consequences: "compulsive usage correlates with a slew of negative mental health effects like loss of analytical skills, memory formation, contextual thinking, conversational depth, empathy, and increased anxiety," according to the company's own studies cited in the lawsuit.

Internal documents also acknowledged that "compulsive usage interferes with essential personal responsibilities like sufficient sleep, work/school responsibilities, and connecting with loved ones." TikTok knew the harm its design created and built the product that way regardless.

The beauty algorithm: amplifying "attractive" users, demoting others

One of the most disturbing revelations involved TikTok's algorithmic manipulation of beauty standards. Internal analysis found that TikTok's main video feed contained "a high volume of … not attractive subjects."

The company's response wasn't to accept diverse body types and appearances. TikTok retooled its algorithm to amplify users the company deemed beautiful while suppressing those it classified as "not attractive."

"By changing the TikTok algorithm to show fewer 'not attractive subjects' in the For You feed, TikTok took active steps to promote a narrow beauty norm even though it could negatively impact their Young Users," Kentucky authorities wrote based on the internal documents.

This wasn't passive content curation. TikTok actively intervened to enforce specific beauty standards through algorithmic amplification, knowing the psychological harm this could cause to young users already vulnerable to body image issues and self-esteem problems.

Screen time limits designed for PR, not protection

TikTok publicly promoted its 60-minute screen time limit as a safety feature for teens. Internal documents revealed the feature was designed as a public relations tool, not a genuine protective measure.

Company communications showed TikTok measured the success of time-limit features by whether they were "improving public trust in the TikTok platform via media coverage" - not by whether they actually reduced teen screen time.

Testing revealed the 60-minute prompt had minimal impact: daily usage fell by approximately 1.5 minutes, or about 1.4%. Teens spent around 108.5 minutes per day before the feature and roughly 107 minutes after. According to the exposed documents, TikTok did not revisit the issue or attempt to make the feature more effective.

One TikTok project manager stated explicitly in internal communications: "Our goal is not to reduce the time spent." Another employee echoed this, noting the actual goal was to "contribute to DAU [daily active users] and retention."

The company's "break" videos - prompts encouraging users to stop scrolling - received similar internal assessment. One executive acknowledged they were "useful in a good talking point" with policymakers but "they're not altogether effective."

Executive awareness of harm to children

Perhaps most damaging were communications showing TikTok executives clearly understood the app's impact on child development and chose engagement over safety.

One unnamed TikTok executive described the tradeoff in stark terms: "I think we need to be cognizant of what it might mean for other opportunities. And when I say other opportunities, I literally mean sleep, and eating, and moving around the room, and looking at someone in the eyes."

The executive explicitly acknowledged that TikTok's algorithmic power deprived children of fundamental developmental experiences - not as an unintended side effect, but as a known consequence of the product's design.

Content moderation failures at scale

The redacted documents also exposed TikTok's content moderation inadequacies. The company internally acknowledged substantial "leakage" rates - percentages of violating content that escaped removal despite policies prohibiting it.

According to the leaked internal data:

- 35.71% leakage rate for "Normalization of Pedophilia"

- 33.33% for "Minor Sexual Solicitation"

- 39.13% for "Minor Physical Abuse"

- 30.36% for "leading minors off platform"

- 50% for "Glorification of Minor Sexual Assault"

- 100% for "Fetishizing Minors"

These weren't external estimates or regulatory allegations. TikTok's own internal measurement systems showed that in one category involving the sexualization of children, "Fetishizing Minors," the company failed to catch any violations, and in the other listed categories it missed between roughly a third and a half.

How the redaction failure happened

The Kentucky Attorney General's office filed the complaint in October 2024 as part of a coordinated multi-state action against TikTok. The filing included confidential TikTok materials obtained through the investigation - internal documents, research studies, executive communications, and data the company considered trade secrets.

Under confidentiality agreements and standard court procedures, these sensitive materials required redaction before public filing. The legal team applied black boxes over the confidential content, creating what appeared to be a properly redacted document with protected information hidden from view.

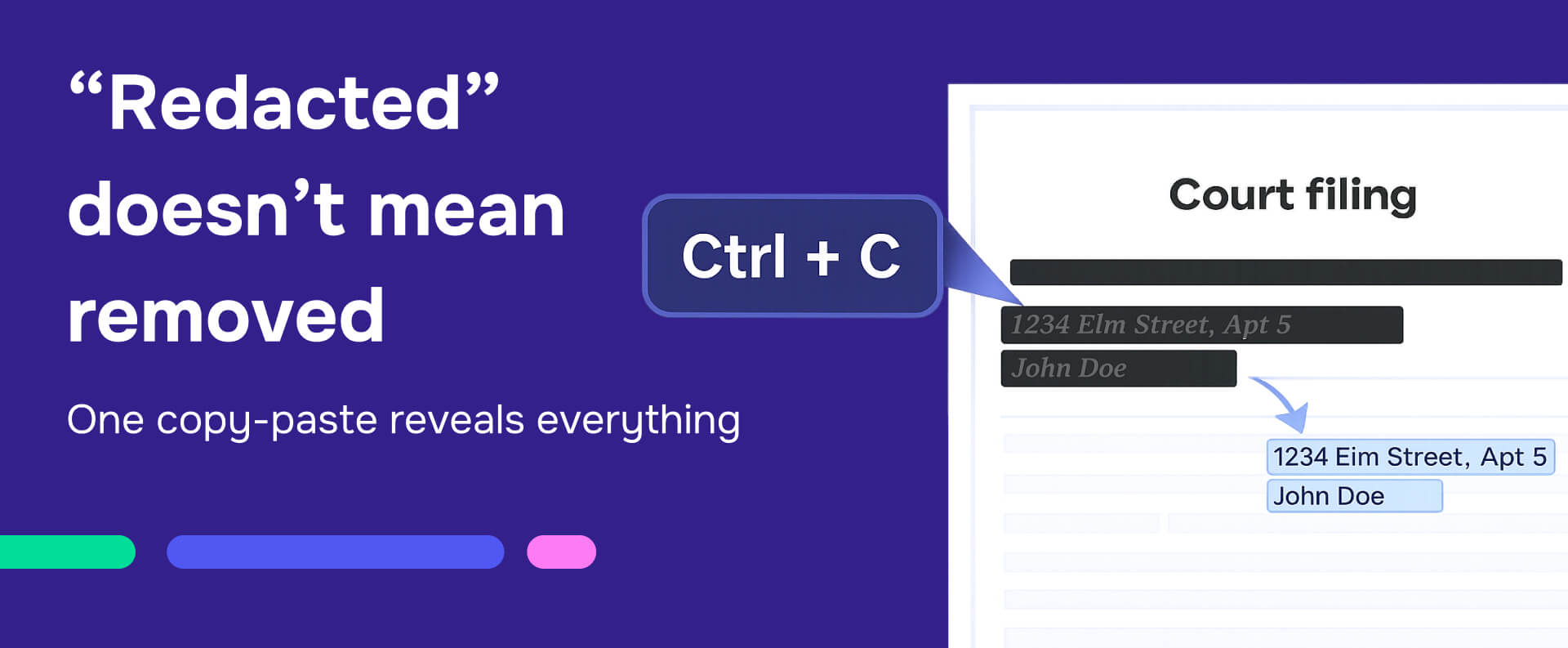

But the redaction was cosmetic only. When Kentucky Public Radio reporters downloaded the public court filing and attempted to copy text for their reporting, they discovered the "redacted" material was fully selectable and copyable. The black boxes were visual layers placed on top of existing text - not permanent removal of the underlying data.

By copying and pasting sections that appeared blacked out, reporters recovered more than 30 pages of internal TikTok secrets that were supposed to remain confidential. Once Kentucky Public Radio published excerpts, the information spread across news organizations nationally.

A state judge subsequently sealed the entire complaint following an emergency motion from the Attorney General's office "to ensure that any settlement documents and related information, confidential commercial and trade secret information, and other protected information was not improperly disseminated."

But the damage was permanent. The documents had already been copied, archived, and reported. The seal came too late to contain information that was now part of the public record through journalism and internet archives.

The technical failure behind fake redaction

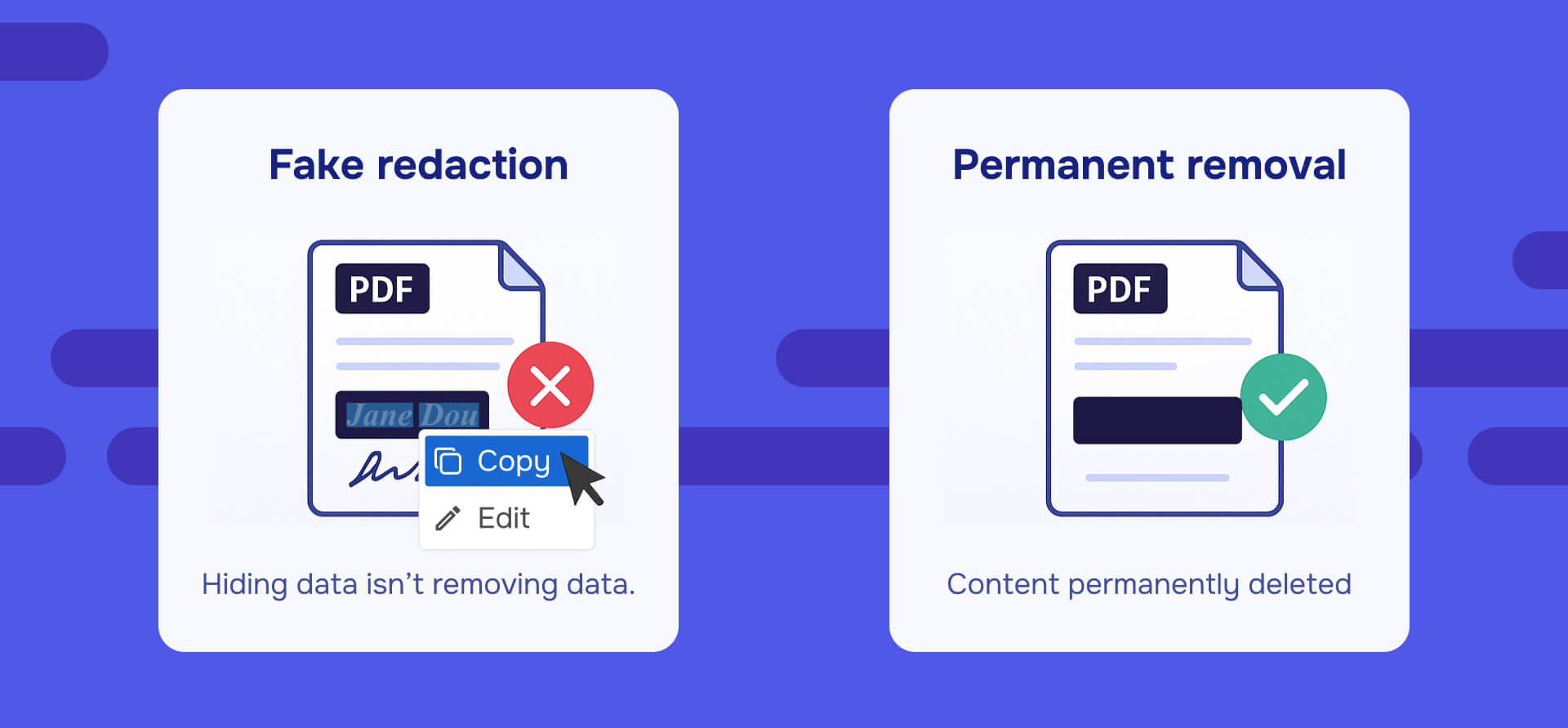

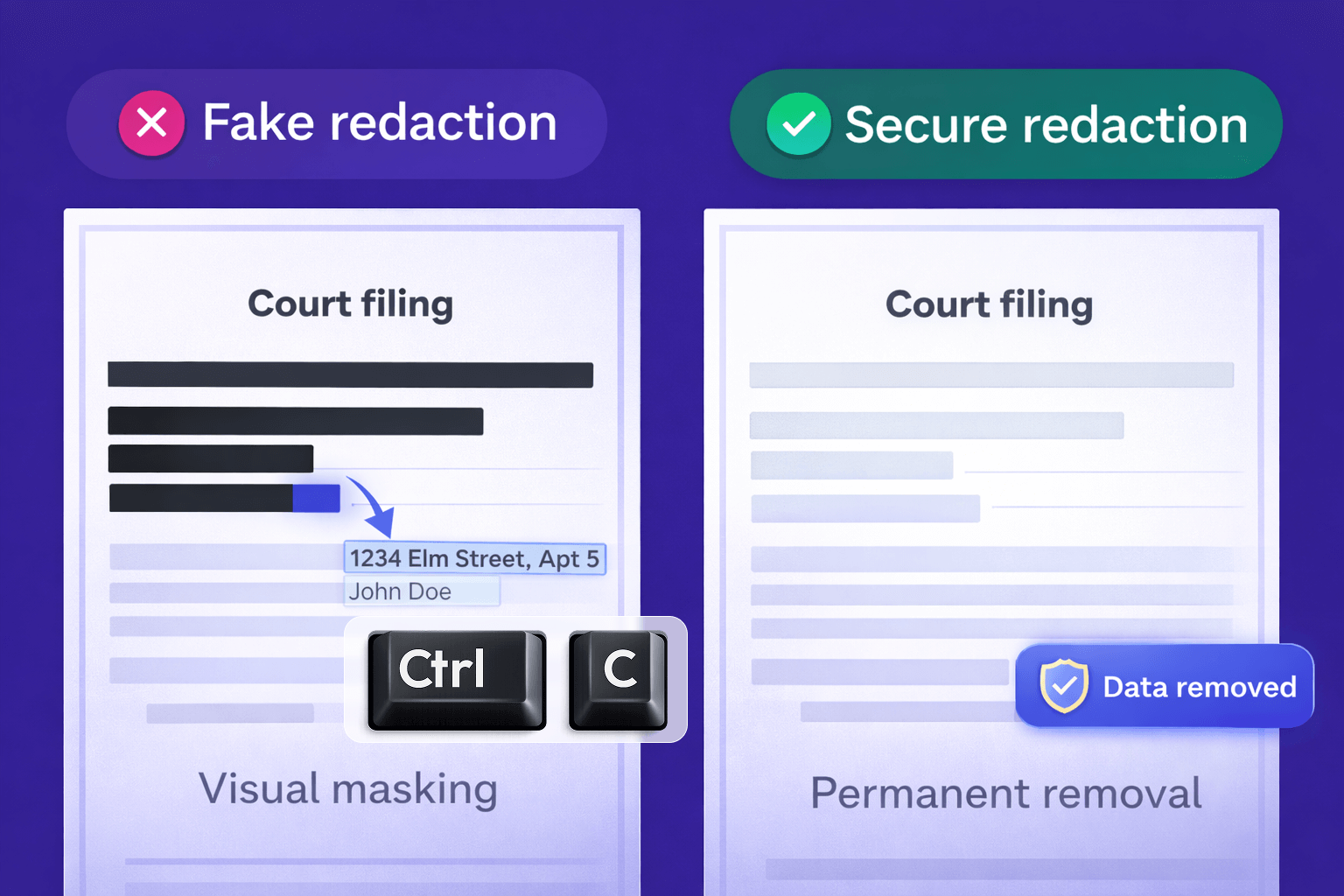

The Kentucky redaction failure demonstrates the most common technical mistake in document security: confusing visual obfuscation with data removal.

Legal and compliance professionals often use annotation tools - available in Adobe Acrobat, PDF editors, and word processors - to place black rectangles or shapes over sensitive text. These tools work like drawing layers: they add visual elements on top of the document without modifying the underlying content.

When documents are "redacted" this way, several weaknesses remain:

- Text persistence - the original text remains in the file structure, fully searchable, selectable, and copyable by anyone who downloads the PDF.

- Metadata preservation - information about document authorship, creation dates, revision history, and edit tracking often remains intact even when visual content appears hidden.

- Layer removal - some PDF viewers allow users to toggle the visibility of annotation layers, revealing "redacted" content without any technical sophistication.

- Text extraction - automated extraction tools recover the underlying text regardless of visual appearance, and when a file's content is stored uncompressed, even a plain text editor can show it.

- Copy-paste vulnerability - as Kentucky Public Radio discovered, simply selecting and copying "redacted" areas often captures the hidden text.
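To make the failure modes above concrete, here is a deliberately simplified sketch. It models one page's content stream as plain text using real PDF operators (`Tj` shows text, `re` and `f` draw a filled rectangle), but the stream, the helper `extract_shown_text`, and the sample sentence are all made up for illustration. Real PDFs are usually compressed and need a proper parser, so treat this as a model of the idea rather than a working extraction tool.

```python
import re

# A toy, uncompressed page content stream (hypothetical example, not from the filing).
# The first line writes text; the second paints a black rectangle over the same area.
PAGE_STREAM = (
    "BT /F1 12 Tf 72 720 Td (Internal memo: confidential finding) Tj ET\n"
    "0 0 0 rg 70 716 260 16 re f\n"
)

# Matches the string operand of a Tj (show text) operator.
TJ_PATTERN = re.compile(r"\((.*?)\)\s*Tj")


def extract_shown_text(stream: str) -> list[str]:
    """Return every string passed to a Tj operator, ignoring drawing operators."""
    return TJ_PATTERN.findall(stream)


if __name__ == "__main__":
    # The rectangle changes what a viewer paints, not what the stream contains.
    print(extract_shown_text(PAGE_STREAM))
```

The black rectangle is just one more drawing instruction. Nothing about it touches the text instruction that precedes it, which is why extraction still returns the "hidden" sentence.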

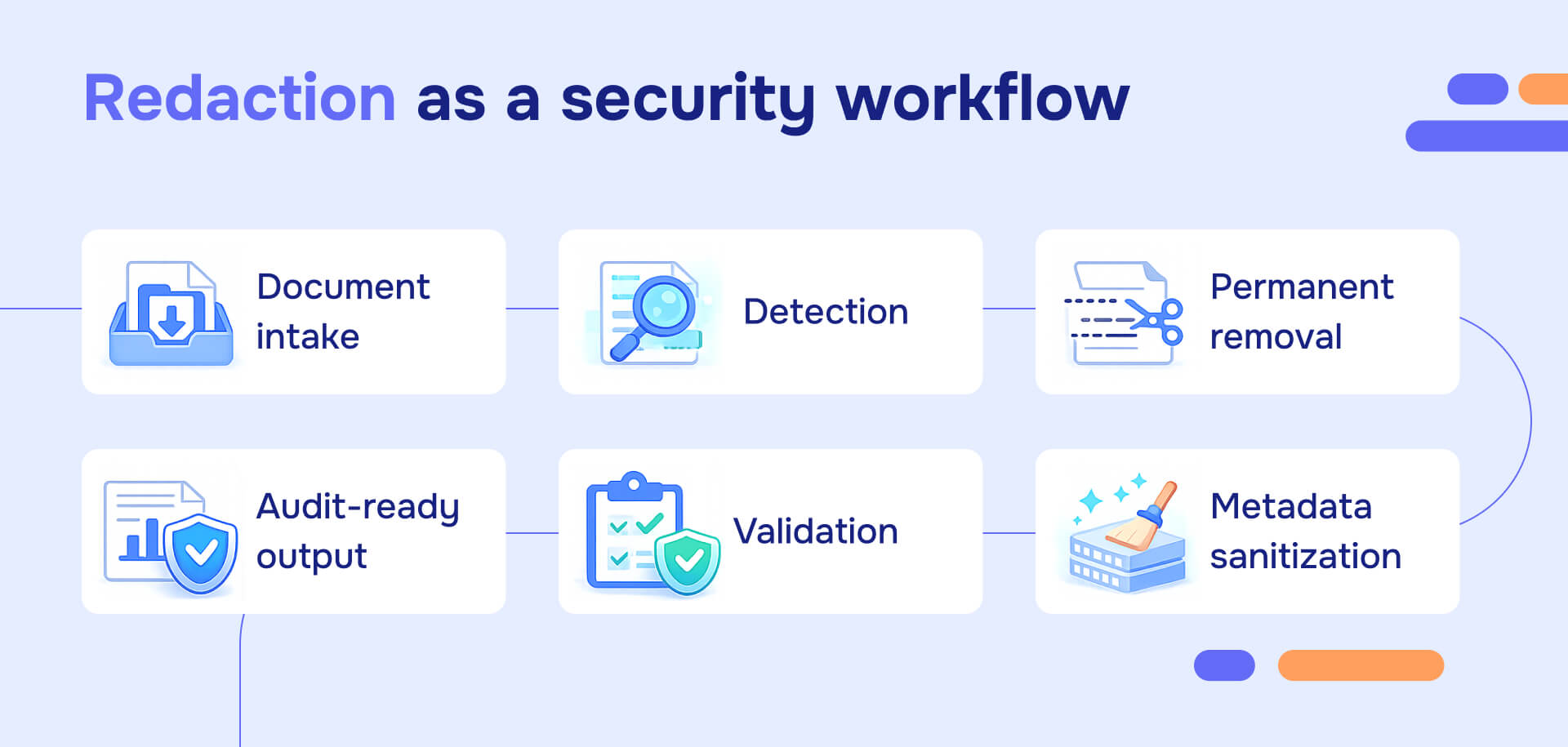

Proper redaction requires permanent removal of sensitive data from file structures, not just visual masking. Professional redaction tools accomplish this by:

- Deleting the actual text from the PDF's content stream

- Replacing sensitive data with blank space or redaction marks at the object level

- Stripping metadata and hidden information from the file

- Flattening all layers to prevent visibility toggles

- Generating audit trails documenting what was redacted and when
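The first two steps in the list above can be sketched with a toy page model. The `TextRun` and `Box` types and the `redact` and `to_stream` helpers are hypothetical names invented for this example, and the model only checks where each run of text starts. A real redaction tool also has to handle text that partially overlaps a region, images, and other embedded objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TextRun:
    """One piece of text and the page position where it starts (toy model)."""
    x: float
    y: float
    text: str


@dataclass(frozen=True)
class Box:
    """Axis-aligned redaction rectangle in page coordinates."""
    x: float
    y: float
    width: float
    height: float

    def contains(self, run: TextRun) -> bool:
        return (self.x <= run.x <= self.x + self.width
                and self.y <= run.y <= self.y + self.height)


def redact(runs: list[TextRun], box: Box) -> list[TextRun]:
    """Drop every run that starts inside the box, so its text no longer exists."""
    return [run for run in runs if not box.contains(run)]


def to_stream(runs: list[TextRun], marks: list[Box]) -> str:
    """Serialize surviving runs, then paint a black mark where text was removed."""
    lines = [f"BT /F1 12 Tf {r.x:g} {r.y:g} Td ({r.text}) Tj ET" for r in runs]
    lines += [f"0 0 0 rg {b.x:g} {b.y:g} {b.width:g} {b.height:g} re f" for b in marks]
    return "\n".join(lines)


if __name__ == "__main__":
    page = [TextRun(72, 740, "Public heading"),
            TextRun(72, 720, "Confidential finding")]
    box = Box(70, 716, 260, 16)
    print(to_stream(redact(page, box), [box]))
```

The order of operations is the point of the design: the text is deleted first, and the black mark is added afterward only as a visual indicator. The output still shows a black box, but there is no longer anything underneath it to copy.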

The difference isn't academic. Visual masking creates the appearance of protection while leaving data fully accessible. Permanent removal actually eliminates the information from the document.

Why this matters beyond TikTok

If state attorneys general offices with dedicated legal technology staff can't implement secure redaction, the technical gap exists everywhere. Understanding policy requirements ("we need to redact confidential information") doesn't translate to proper execution ("we need to permanently remove data from file structures").

TikTok entered confidentiality agreements expecting them to be honored. When redaction failed, trade secrets became permanently public. No subsequent sealing order could undo the exposure - once Kentucky Public Radio published the documents, they spread across news organizations, research databases, and internet archives. Legal agreements mean nothing if technical implementation fails.

The irony: Kentucky's lawsuit alleges TikTok failed to protect children's data and prioritized engagement over safety. Yet the state's own document handling exposed TikTok's secrets through the same technical failure - removing visual access without removing underlying data. Both TikTok's alleged deletion failures and Kentucky's redaction failures stem from the same misunderstanding about hiding versus removing data.

Corporate secrets worth billions became public through a mistake that cost $0 to exploit: copying and pasting from a publicly filed court document.

What the documents reveal about TikTok privacy concerns

The 260-video addiction threshold wasn't incidental - TikTok studied user behavior to understand how quickly habits form, then optimized features to reach that threshold efficiently. Rapid-fire auto-advance, algorithmic personalization, and infinite scroll were engineered responses to research showing how to hook users.

The company actively adjusted its algorithm to promote "attractive" users while suppressing others, deliberately amplifying content reinforcing narrow beauty standards for teenagers dealing with body image issues.

The 60-minute screen time limit reduced usage by 90 seconds while generating positive media coverage - safety theater measured by PR impact, not effectiveness. Internal research documented mental health effects and developmental disruption, yet the business model required maximizing time on platform regardless of consequences.

Content moderation showed 100% leakage rates for fetishization of minors - TikTok's systems caught zero instances of that content type. These weren't occasional failures; they were systematic gaps in protecting the most vulnerable users.

The broader pattern: children's data remains unprotected

The exposed documents show TikTok knew it was collecting data from users under 13, built features to hook young users, and designed around child safety requirements rather than implementing them. Even when authorities prosecute these violations, their own document handling fails - the Kentucky filing removed visual access to confidential information while preserving data in file structures, exactly like TikTok's alleged deletion failures. Both stem from the same misunderstanding: hiding data isn't removing it.

Building real redaction into workflows

Organizations handling sensitive documents - whether in litigation, compliance, customer service, or any context requiring confidentiality - need systematic approaches that actually remove data rather than visually hiding it.

Use professional redaction tools, not general PDF editors: Standard PDF annotation tools, word processor drawing features, and screenshot-based workflows don't reliably remove underlying data. Professional redaction software permanently deletes sensitive information from file structures while maintaining document integrity. Legal technology experts emphasize this distinction as the critical difference between cosmetic redaction and secure redaction.

Implement the copy-paste test: Before sharing any supposedly redacted document, attempt to copy and paste from "redacted" areas. If text appears, the redaction failed. This $0 test catches the exact failure that exposed TikTok's secrets and takes seconds to perform.
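The same check can be scripted so it runs on every outgoing document. The sketch below assumes you already have the text that an extraction tool pulled from the "redacted" file, plus a list of phrases known to be in the unredacted original. The function names and sample strings are hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks do not hide a match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_leaks(extracted_text: str, sensitive_phrases: list[str]) -> list[str]:
    """Return the sensitive phrases that still appear in the extracted text."""
    haystack = normalize(extracted_text)
    return [p for p in sensitive_phrases if normalize(p) in haystack]


if __name__ == "__main__":
    # Hypothetical text pulled from a "redacted" draft by an extraction tool.
    extracted = "Exhibit A\nWitness: Jane\nDoe, account 12345"
    leaks = find_leaks(extracted, ["Jane Doe", "account 12345", "trade secret"])
    print(leaks or "no known phrases found")
```

Any match means the redaction failed. An empty result is weaker evidence: it only shows that these particular phrases were not found in the extractable text, so it complements the manual copy-paste check rather than replacing it.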

Remove sensitive data at the source: The most secure redaction happens before documents are created. Use internal identifiers instead of names, reference case numbers rather than personal details, and generate reports that never contain sensitive data in the first place. Data that never enters documents doesn't need to be redacted.

Validate metadata removal: Redaction must extend beyond visible content to metadata - document properties showing author names, revision history, comments, tracked changes, and embedded objects. Metadata has exposed confidential information in countless cases despite proper visible redaction.
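A rough first pass at metadata validation can be automated. The sketch below scans a PDF's raw bytes for a few standard document-information keys and the XMP packet marker. It is a heuristic under stated assumptions: the marker list is incomplete, the function name is hypothetical, and metadata stored inside compressed objects will not be found this way, so a clean result is not proof that the file is clean.

```python
# A few keys of the PDF document information dictionary, plus the XMP packet marker.
METADATA_MARKERS = [b"/Author", b"/Creator", b"/Producer", b"/Title", b"<x:xmpmeta"]


def find_metadata_markers(pdf_bytes: bytes) -> list[str]:
    """Return the metadata markers that appear in the raw, uncompressed bytes."""
    return [m.decode("ascii") for m in METADATA_MARKERS if m in pdf_bytes]


if __name__ == "__main__":
    # Hypothetical fragment of a PDF that still carries author and producer fields.
    sample = (b"%PDF-1.4\n1 0 obj\n"
              b"<< /Author (J. Smith) /Producer (ExampleWriter) >>\nendobj\n")
    found = find_metadata_markers(sample)
    print(found or "no markers found in raw bytes")
```

In practice the bytes would come from reading the file in binary mode, and any hit is a prompt to inspect the document properties before the file leaves the building.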

Generate audit trails for redaction: Professional tools like Redactable create certificates documenting what was redacted, when, by whom, and through what process. These audit trails prove that permanent data removal occurred rather than visual masking - exactly the evidence needed when redaction integrity is questioned.
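As an illustration of what such a record might contain, the sketch below builds a minimal audit entry that ties a redaction event to the exact file versions involved by hashing both. The field names and the `build_audit_record` helper are assumptions made for this example. This is not the certificate format used by Redactable or any other product.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def build_audit_record(original: bytes, redacted: bytes,
                       operator: str, regions: int) -> dict:
    """Describe one redaction event: who, when, how many regions, which files."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "operator": operator,
        "regions_redacted": regions,
        "original_sha256": sha256_hex(original),
        "redacted_sha256": sha256_hex(redacted),
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the contents of the two files.
    record = build_audit_record(b"original file bytes", b"redacted file bytes",
                                operator="analyst@example.org", regions=3)
    print(json.dumps(record, indent=2))
```

The hashes identify which file went in and which came out, but they do not by themselves show that the data was removed. A record like this is most useful when stored alongside the results of validation checks and protected against later edits, for example by signing it.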

Train teams on technical requirements: The gap between "we need to redact" (policy understanding) and "we need to permanently remove data from file structures" (technical understanding) exists across organizations. Training must address both what needs redaction and how to implement it securely.

Treat redaction as security infrastructure: Proper redaction isn't a paralegal task or administrative function - it's a security control protecting confidential information, trade secrets, personal data, and privileged communications. Organizations should approach redaction procedures with the same rigor applied to access controls, encryption, and other security measures.

The permanent consequences of temporary mistakes

TikTok condemned the disclosure as "highly irresponsible" and argued the documents were taken out of context. But the complaint wasn't under seal when Kentucky Public Radio accessed it - the emergency sealing motion came after publication. TikTok's objections couldn't undo the exposure.

The internal documents are now permanently archived. Future reporting will cite TikTok's own research on 260-video addiction thresholds, algorithmic suppression of "unattractive" users, and executive acknowledgment that the app deprives children of sleep and real-world interaction.

For Kentucky's Attorney General, the technical mistake undermined their case - TikTok gained grounds to argue improper disclosure, other states' coordinated strategy was compromised, and focus shifted from the defendant's violations to the state's document handling.

The lesson: redaction failures have permanent consequences that no legal remedy can address. Visual masking creates the appearance of protection while leaving data fully accessible. The gap between cosmetic compliance and actual security determines whether confidential information stays confidential.

The aftermath of the 2024 TikTok lawsuit

Attorney General Russell Coleman's lawsuit alleged TikTok "was specifically designed to be an addiction machine, targeting children who are still in the process of developing appropriate self-control."

The complaint charged that TikTok:

- Created a deliberately addictive content system to maximize time young users spend on the platform

- Designed features exploiting children's psychological vulnerabilities

- Engaged in deceptive marketing about platform risks

- Refused to address the accessibility and spread of child sexual abuse material

Kentucky joined California, New York, Illinois, Louisiana, Massachusetts, Mississippi, North Carolina, New Jersey, Oregon, South Carolina, Vermont, Washington, and the District of Columbia in coordinated enforcement actions against TikTok's alleged role in worsening children's mental health.

The redaction failure didn't change the underlying legal claims, but it dramatically altered the information landscape surrounding them. Arguments that previously relied on investigative findings and regulatory analysis could now cite TikTok's own internal research and executive communications. The company's public statements about safety and wellbeing now faced direct contradiction from leaked internal documents.

For TikTok, the exposure created multiple new problems beyond the original lawsuit. Competitors gained insight into the company's research methodologies and product strategies. Policymakers obtained evidence for regulatory proposals. Parents and advocacy groups received documentation supporting claims about the app's impact on children.

Most significantly, the leaked documents will influence public perception of TikTok permanently. The company can dispute allegations, but it cannot dispute its own internal communications acknowledging addiction thresholds, algorithmic manipulation of beauty standards, and PR-focused safety features.

Stop using fake redaction tools

The Kentucky TikTok lawsuit exposed internal secrets worth billions through a technical mistake that cost nothing to exploit. TikTok's confidential research on addiction thresholds, algorithmic manipulation, and executive acknowledgments of harm became permanently public because a government legal team used drawing tools instead of proper redaction software.

The lesson is direct: stop using PDF annotation tools, word processor shapes, and screenshot-based workflows for redaction. These methods don't remove data - they temporarily hide it while leaving everything fully accessible to anyone who downloads the document.

Organizations must abandon risky practices and adopt professional redaction tools that:

- Permanently delete sensitive data from file structures - not visual layers that can be copied, pasted, or extracted

- Remove metadata and hidden information - author names, revision history, comments, embedded objects

- Generate audit trails - cryptographic proof documenting what was redacted, when, and by whom

- Support validation testing - confirming data is actually gone before documents are shared

The cost of proper redaction infrastructure is minimal compared to the consequences of failure. TikTok's leaked documents will influence regulation and public perception for years. The Kentucky Attorney General's redaction failure compromised a multi-state enforcement strategy. Both parties learned the same lesson: declaring information "protected" means nothing without technical implementation that actually removes it.

Professional redaction isn't optional for organizations handling confidential documents, trade secrets, privileged communications, or personal data. It's the baseline requirement for document security in litigation, compliance, and data sharing contexts.

Visual masking created TikTok's exposure. Permanent removal prevents it. Choose tools accordingly.